A modern Microsoft 365 workspace optimised for lawyers

Choose Cicero and experience the future of legal software.

About us

Cicero: safe and efficient

Optimize your legal practice with Cicero, the innovative software solution that offers an advanced and secure working environment within Microsoft 365.

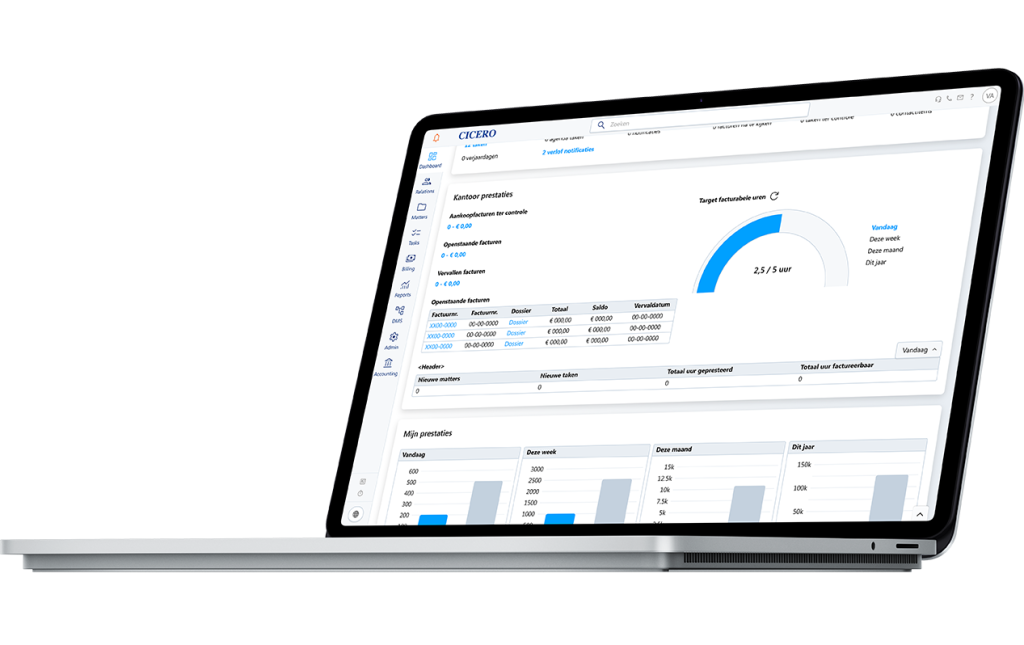

Tailored for lawyers

Designed to meet the specific needs of legal professionals

The package has a logical structure and consists of several interconnected core applications:

- Easily manage your relationships
- Quickly create and edit files
- Record services performed
- Create and monitor (e-)invoices
- Keep comprehensive records and calculate interest
- Send out mailings and invitations
- Generate auto-filled documents
- Define case workflows per employee and lawyer

Communication and file sharing via Teams

Cicero automatically creates a SharePoint site and a Teams channel for each case, which increases efficiency and reduces email traffic. Beyond secure internal collaboration, this also makes communication with clients easy: clients can view parts of the case and upload files via Teams. This is flexible and convenient, because many clients already use Teams.

Document management in SharePoint

Files the user has access rights to are also accessible directly via SharePoint or Microsoft Office, which improves reliability and accessibility. Because document management takes place in SharePoint, files remain available even if Cicero runs into issues, and version control and security are guaranteed.

How we work

Software tailored for lawyers

A solution designed specifically for lawyers, built on over 40 years of experience and continuous feedback from our loyal customers.

Integration with Microsoft 365

We strive to stay ahead of the changing needs of the modern lawyer.

We have moved Cicero from on-premises software to the cloud. Client and case data are hosted in your office's own Microsoft 365 environment, so your data stays secure and is always accessible.

We are convinced that this approach offers the most opportunities for innovation and for integration with your other favourite tools.

Optimise your practice with Cicero and Copilot

Efficiently draft new contracts and legal documents based on existing templates and examples.

Quickly summarize key points and clauses, and gain insights into complex legal texts.

Advanced search lets you look directly for specific clauses or terms across all your legal documents.

Ensure that all your documents comply with relevant regulations and company policies.

Save time by automating routine tasks such as drafting standard letters.

Functionalities

Cicero brings everything a lawyer needs together

We understand the challenges and needs of today's legal professionals. In addition to Cicero's advanced features, we integrate with "best of breed" software applications for added flexibility and efficiency.

Integrations

Some of our favourite integrations

Cicero excels at integrating with "best of breed" tools, giving lawyers easy access to the best solutions on the software market.

Testimonials

Feedback from our loyal clients is the driving force behind our continuous improvement.

With a network of over 3,000 clients, we foster strong and effective collaboration.

All modules are interconnected, which makes it possible to work quickly and efficiently. You only need to enter personal information once, and all other modules integrate seamlessly. I am very positive about the cooperation with Cicero. We work closely together, and I strongly feel that we are on the same wavelength. When we comment on functional aspects of the package, they respond and take our comments into account when adjusting the software.

Wouter van Cutsem, Defensis

The program has numerous strengths. The invoicing options alone save me two full days per month, and follow-up is fully automated too. On top of that, you can see the firm's exact turnover at any time. That makes Cicero the perfect management tool for law firms.

Herwig Hemmerechts, Hemmerechts & Van Laethem bvba

It's not just the follow-up of debt collection cases, but the overall ease of use: the system comes with loads of templates, and it's just as easy to create your own. Whenever you call or send an email, you get help immediately.

Ann Vandermotten, Gaius Advocaten

Try it for yourself

Manage your office with Cicero

Let's have a conversation and explore the possibilities